When working with large quantities of multi-dimensional data from multiple sources, dataset contamination is inevitable. Many methods have been developed to diagnose such contamination, yet unforeseen errors can still slip through. CISESS scientists Malarvizhi Arulraj and Veljko Petkovic and their colleagues examine this problem, drawing on an error they noticed in their own research.

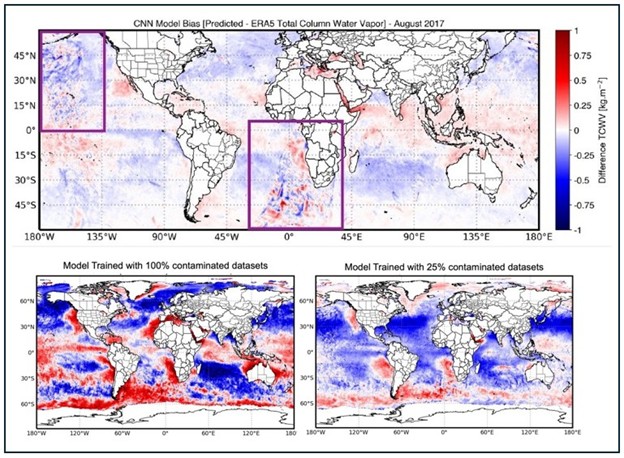

While using simulated observations of a future space-borne passive microwave sensor to develop a convolutional neural network (CNN)-based model for predicting Total Column Water Vapor (TCWV), they discovered an oversight in time matching that had contaminated a small part of their TCWV dataset. This prompted the authors to study how the share of contaminated data in the training set affects both the model's performance and its ability to reveal the data errors. They found that the CNN model output does not expose the data issue when the model is trained only on contaminated data (see Figure). As the percentage of contaminated samples in the training dataset decreased, however, the model's ability to reveal the data issue increased. They conclude that, because some level of data contamination is unavoidable, users, and especially benchmark dataset developers, should carefully examine the data going into their models to understand its quality.
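
The kind of experiment described above, training on sets that contain different shares of contaminated samples, can be sketched in a few lines of Python. This is only an illustrative sketch and not the authors' code: the function name `mix_training_set`, the sample counts, and the list of fractions are all hypothetical, and it assumes the contaminated samples have already been flagged.

```python
import numpy as np

def mix_training_set(clean_idx, contaminated_idx, contaminated_fraction,
                     n_samples, seed=0):
    """Draw n_samples indices with the requested share of contaminated samples.

    Hypothetical helper for illustration; assumes contaminated samples
    are already identified by index.
    """
    if not 0.0 <= contaminated_fraction <= 1.0:
        raise ValueError("contaminated_fraction must be between 0 and 1")
    rng = np.random.default_rng(seed)
    n_bad = int(round(contaminated_fraction * n_samples))
    n_good = n_samples - n_bad
    if n_bad > len(contaminated_idx) or n_good > len(clean_idx):
        raise ValueError("not enough samples for the requested mix")
    # Sample without replacement from each pool, then shuffle the result.
    bad = rng.choice(contaminated_idx, size=n_bad, replace=False)
    good = rng.choice(clean_idx, size=n_good, replace=False)
    mixed = np.concatenate([good, bad])
    rng.shuffle(mixed)
    return mixed

# Assumed toy setup: 1000 samples, the last 100 flagged as contaminated.
clean_idx = np.arange(0, 900)
contaminated_idx = np.arange(900, 1000)

# One training subset per contamination level (values are illustrative).
fractions = [0.0, 0.25, 0.5, 1.0]
training_sets = {
    f: mix_training_set(clean_idx, contaminated_idx, f, n_samples=100)
    for f in fractions
}
```

Each entry in `training_sets` could then be used to train a separate copy of the model, so that the resulting errors can be compared across contamination levels.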

Citation: Arulraj, Malarvizhi, Veljko Petkovic, Huan Meng, and Ralph R. Ferraro, 2025: Lessons learned: Can machine learning model expose dataset contamination? Artif. Intell. Earth Syst., accepted, https://doi.org/10.1175/AIES-D-25-0030.1.